If you’ve got an app that even tangentially touches NodeJS/NPM (really, if you have eyes and ears), you’ve probably seen these.

I am not going to spend a lot of time writing about these particular pieces of malware. The posts above, such as the one from my mate Paul McCarty, will do the job much better than I ever could.

I am also not going to talk much about how to respond to this directly. To those affected I wish you a relatively peaceful IR process.

Rather, I want to spend my words on how we, as engineers, can move forward from this: some steps for short-term solutions, plans for medium-term solutions, and then some thought pieces about longer-term plays.

Key thoughts:

Our model of dependency trust is broken, and has been broken since package managers were first created.

Industry standards for managing this risk are fundamentally faulty.

Manifest-based composition analysis is, at best, a great distraction from improving your security posture.

Vulnerability management is less about managing vulnerabilities and more about managing expectations. CVSS, EPSS, you name it: they are all largely awful. The number of CVEs never gets smaller, and your system never gets more secure.

Reachability analysis might give us some relief from this, but I’ve yet to see it actually provide benefits when triaging vulnerabilities.

Anyway, on to the actual practical bits. This post examines short-term solutions for JavaScript and Python in a very compressed manner; I will take a look at other languages in future posts.

Pinning dependencies

Dependencies are typically specified within two key file types: manifest files and lock files. Manifest files (package.json, go.mod) typically contain the list of direct dependencies, e.g. ones you have explicitly installed via npm install or pip install. Lock files, on the other hand, contain not only the direct dependencies but typically also the indirect, or transitive, dependencies, as well as some form of cryptographic verification information (largely used to avoid re-downloading things that haven’t changed and to detect corruption).
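To make the manifest/lockfile split concrete, here is a minimal Python sketch that reads an npm lockfile and separates direct from transitive dependencies. It assumes the lockfileVersion 2/3 layout, where a packages map is keyed by install path, the root ("") entry mirrors what package.json declares, and each installed package carries version and integrity fields. Workspaces and the older v1 layout are ignored, and the sample data and package names are hypothetical.

```python
import json


def load_lockfile(path: str) -> dict:
    """Read a package-lock.json from disk."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


def split_dependencies(lock: dict) -> tuple[dict, dict]:
    """Split a lockfileVersion 2/3 package-lock.json into direct and
    transitive dependencies, keyed by their install path."""
    packages = lock.get("packages", {})
    root = packages.get("", {})
    # The root entry ("") records what the manifest declares: the direct dependencies.
    direct_names = set(root.get("dependencies", {})) | set(root.get("devDependencies", {}))
    direct, transitive = {}, {}
    for path, meta in packages.items():
        if not path:
            continue  # skip the root project itself
        # "node_modules/a/node_modules/b" -> "b"; scoped names like "@scope/pkg" survive intact.
        name = path.rsplit("node_modules/", 1)[-1]
        entry = {"version": meta.get("version"), "integrity": meta.get("integrity")}
        if path == f"node_modules/{name}" and name in direct_names:
            direct[path] = entry
        else:
            transitive[path] = entry
    return direct, transitive


# Hypothetical lockfile: package_name is declared directly, some-helper arrives with it.
SAMPLE_LOCK = {
    "lockfileVersion": 3,
    "packages": {
        "": {"name": "my-app", "dependencies": {"package_name": "1.2.4"}},
        "node_modules/package_name": {"version": "1.2.4", "integrity": "sha512-PLACEHOLDER"},
        "node_modules/some-helper": {"version": "0.3.1", "integrity": "sha512-PLACEHOLDER"},
    },
}

if __name__ == "__main__":
    # For a real project: split_dependencies(load_lockfile("package-lock.json"))
    direct, transitive = split_dependencies(SAMPLE_LOCK)
    print("direct:", sorted(direct))
    print("transitive:", sorted(transitive))
```

Note that some-helper lands in the transitive bucket even though it sits at the top of node_modules: npm hoists packages, so install path alone doesn’t tell you whether you asked for something.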

The dependencies within manifest files are typically declared using a constraint format such as package_name@>1.2.3, which indicates that we want to install the dependency package_name at any version greater than 1.2.3 from a package repository.
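Real package managers use richer grammars (npm’s semver ranges, Python’s PEP 440 specifiers), but the core idea is simple: a constraint is a predicate over versions. This simplified Python sketch handles a single comparison operator against purely numeric dotted versions (no pre-release tags), which is enough to see what >1.2.3 actually admits.

```python
import operator

# Constraint operators mapped to comparisons on version tuples.
OPS = {
    ">=": operator.ge,
    "<=": operator.le,
    ">": operator.gt,
    "<": operator.lt,
    "==": operator.eq,
}


def parse_version(text: str) -> tuple[int, ...]:
    """Turn '1.2.3' into (1, 2, 3) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def satisfies(version: str, constraint: str) -> bool:
    """Check a version against a single constraint such as '>1.2.3'."""
    # Try two-character operators first so '>=' is not mistaken for '>'.
    for symbol in sorted(OPS, key=len, reverse=True):
        if constraint.startswith(symbol):
            bound = parse_version(constraint[len(symbol):])
            return OPS[symbol](parse_version(version), bound)
    # A bare version with no operator is treated as an exact pin.
    return parse_version(version) == parse_version(constraint)


if __name__ == "__main__":
    for candidate in ["1.2.3", "1.2.4", "1.2.5", "2.0.0"]:
        print(candidate, satisfies(candidate, ">1.2.3"))  # False, True, True, True
```

Everything newer than 1.2.3 satisfies the constraint, including versions that haven’t been published yet.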

Versions published to most package registries are typically available forever. There are circumstances where a package may need to be removed (such as a supply chain compromise), and the behaviour of the registries varies. You can see a breakdown of behaviours below.

| Registry | Response Code | Removal Policy | Alternative Actions |
|---|---|---|---|
| NPM | 404 | Limited unpublishing (24-72 hours after publish) plus other conditions | Tombstone/placeholder for established packages |
| PyPI | 404 | Authors can delete releases but not entire projects easily | "Yanked" releases: hidden but metadata visible |
| Maven Central | Never returns 404 | Never deletes (core immutability principle) | Deprecation warnings only |
| RubyGems | 404 | Complete removal possible but discouraged | "Yank" versions: hidden from search |
| Cargo | Normal response with warning | Cannot delete once published | "Yank" versions: unavailable for new projects |
| NuGet | 404 | Complete deletion allowed for package owners | "Unlist" packages: hidden from search |

We can see that some registries don’t have any facility for removal whatsoever; rather, they rely upon the behaviour of the package manager itself.
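Yanking is a good example of that reliance: the registry only marks the release, and it is the installer that decides what to do with it. Below is a simplified Python sketch of the rule PEP 592 describes for PyPI: installers ignore yanked releases when a non-yanked version satisfies the request, but may still use a yanked release when it is pinned exactly with ==. The Release type and the accepts predicate are illustrative only, not pip’s actual internals.

```python
from dataclasses import dataclass
from typing import Callable, Optional

Version = tuple[int, ...]


@dataclass
class Release:
    version: Version
    yanked: bool = False


def select_release(releases: list[Release], accepts: Callable[[Version], bool],
                   pinned_exactly: bool = False) -> Optional[Release]:
    """Pick the newest acceptable release, skipping yanked ones as PEP 592 describes."""
    candidates = [r for r in releases if accepts(r.version)]
    usable = [r for r in candidates if not r.yanked]
    if usable:
        return max(usable, key=lambda r: r.version)
    if pinned_exactly and candidates:
        # An exact == pin may still select a yanked release (installers typically warn).
        return max(candidates, key=lambda r: r.version)
    return None


if __name__ == "__main__":
    releases = [Release((1, 2, 4)), Release((1, 2, 5), yanked=True)]
    # Loose request: the yanked 1.2.5 is skipped.
    print(select_release(releases, lambda v: v > (1, 2, 3)).version)
    # Exact pin to the yanked version: it is still returned.
    print(select_release(releases, lambda v: v == (1, 2, 5), pinned_exactly=True).version)
```

Note the second case: an exact pin keeps resolving to a yanked version, so yanking alone won’t pull a bad release out of a project that already pins it.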

These behaviours, combined with the typical constraint definitions within manifest files, create the first component of supply chain risk.

Going back to the package_name@>1.2.3 example: when all is working, a maintainer publishes 1.2.4, you run npm install, and you ultimately end up with v1.2.4 of package_name. What happens if (as in the recent compromises) a bad actor publishes v1.2.5? Well, the next time you run npm install you’re getting the bad version, and it’s game over.
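A toy resolver makes the failure mode obvious. This Python sketch assumes the resolver simply picks the newest published version that satisfies >1.2.3. npm’s real resolution also considers dist-tags such as latest, but npm publish applies the latest tag to new releases by default, so the outcome in this scenario is the same.

```python
def parse(version: str) -> tuple[int, ...]:
    """'1.2.10' -> (1, 2, 10), so versions compare numerically rather than as strings."""
    return tuple(int(part) for part in version.split("."))


def resolve(published: list[str], floor: str) -> str:
    """Pick the newest published version satisfying '>floor' (simplified resolver)."""
    candidates = [v for v in published if parse(v) > parse(floor)]
    if not candidates:
        raise LookupError(f"no published version satisfies >{floor}")
    return max(candidates, key=parse)


if __name__ == "__main__":
    registry = ["1.2.3", "1.2.4"]      # a legitimate maintainer publishes 1.2.4
    print(resolve(registry, "1.2.3"))  # 1.2.4
    registry.append("1.2.5")           # an attacker publishes 1.2.5
    print(resolve(registry, "1.2.3"))  # 1.2.5: the next install silently picks it up
```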

How do we fix this?

Provide strict constraints

Define your dependencies using explicit constraints rather than wide ones. For example, you should specify package_name@1.2.3 rather than package_name@>1.2.3.

This only protects your direct dependencies. Unfortunately, most dependencies have dependencies of their own, and they may not specify them as strictly as you do.
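One way to enforce exact pins is a small check in CI. The Python sketch below flags any package.json dependency whose spec is not a bare MAJOR.MINOR.PATCH version (optionally with a pre-release or build suffix); ranges, tags such as latest, and git or URL specs are all flagged, deliberately conservatively. The package names are hypothetical. Like the strict constraints themselves, it only sees direct dependencies. npm’s --save-exact option (also settable as save-exact=true in .npmrc) writes exact versions in the first place.

```python
import json
import re

# "MAJOR.MINOR.PATCH", optionally followed by a pre-release or build suffix, counts as exact.
EXACT_VERSION = re.compile(r"^\d+\.\d+\.\d+(?:[-+][0-9A-Za-z.+-]+)?$")
SECTIONS = ("dependencies", "devDependencies", "optionalDependencies")


def loose_constraints(manifest: dict) -> dict[str, str]:
    """Return every dependency in a package.json whose spec is not an exact version."""
    flagged = {}
    for section in SECTIONS:
        for name, spec in manifest.get(section, {}).items():
            # Ranges (^, ~, >, *), tags like "latest", and git/URL specs all get flagged.
            if not EXACT_VERSION.match(spec.strip()):
                flagged[name] = spec
    return flagged


if __name__ == "__main__":
    manifest = json.loads("""
    {
      "dependencies": {"package_name": ">1.2.3", "other_package": "2.0.0"},
      "devDependencies": {"test_tool": "^5.1.0"}
    }
    """)
    print(loose_constraints(manifest))  # {'package_name': '>1.2.3', 'test_tool': '^5.1.0'}
```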

Use deterministic operating modes

The largest cause of frustration, and the largest introducer of risk, within dependency managers is non-deterministic operation. NPM is a great example of this behaviour:

Running npm install results in an interesting set of behaviours:

Install performs a full dependency resolution, which makes it slow. This process is necessary if you don’t already have a package-lock.json, but is entirely superfluous if you do.

If dependencies (either direct or indirect) have loose constraints, install will update them transparently without prompting. This is fine if you intend it, but can be a disaster when security is the aim.

Arguably this violates the entire point of a lock file, but hey.

NPM offers an alternative install mode, npm ci, which is designed to install exactly what is in package-lock.json and will fail if the lock file doesn’t match package.json or can’t be installed.
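Part of what makes npm ci safe is that it refuses to run when package.json and package-lock.json disagree. The Python sketch below approximates only that up-front check, comparing package.json against the root ("") entry of a lockfileVersion 2/3 lock file, which I’m assuming records the same dependency specs as the manifest. npm’s real validation covers much more of the tree; the package name is hypothetical.

```python
SECTIONS = ("dependencies", "devDependencies", "optionalDependencies")


def manifest_lock_mismatches(manifest: dict, lock: dict) -> list[str]:
    """List specs that differ between package.json and the lock file's root entry."""
    root = lock.get("packages", {}).get("", {})
    problems = []
    for section in SECTIONS:
        declared = manifest.get(section, {})
        locked = root.get(section, {})
        # Check names present on either side so additions and removals are both caught.
        for name in sorted(set(declared) | set(locked)):
            if declared.get(name) != locked.get(name):
                problems.append(
                    f"{section}/{name}: package.json has {declared.get(name)!r}, "
                    f"lock file has {locked.get(name)!r}"
                )
    return problems


if __name__ == "__main__":
    manifest = {"dependencies": {"package_name": "1.2.4"}}
    lock = {"lockfileVersion": 3, "packages": {"": {"dependencies": {"package_name": "1.2.3"}}}}
    for problem in manifest_lock_mismatches(manifest, lock):
        print(problem)
```

In a pipeline you would fail the job whenever the returned list is non-empty, which is the same "stop rather than re-resolve" stance npm ci takes.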

So for NPM people:

Developers: Use npm install when adding or upgrading dependencies, then commit package-lock.json. Afterwards, use npm ci.

CI/CD & Production: Always use npm ci to guarantee a clean, reproducible install.

I’ve highlighted similar operating modes for other dependency tools within the JavaScript and Python ecosystems.

| Package Manager | npm ci Equivalent | Deterministic |
|---|---|---|
| yarn | | ✅ Yes |
| pnpm | | ✅ Yes |
| bun | | ✅ Yes |
| pip | | ⚠️ Limited (External tool) |
| Poetry | | ✅ Yes |
| Pipenv | | ✅ Yes |
| uv | | ✅ Yes |
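In CI it helps to pick the frozen mode automatically from whichever lock file is present. The Python sketch below does that. The command for each lock file is my own mapping from each tool’s documented flags rather than something taken from this post, so check it against the versions you run (Yarn 2+, for instance, uses --immutable instead of --frozen-lockfile). pip and Poetry are left out because I’m less certain of a single equivalent command for them.

```python
import tempfile
from pathlib import Path

# Lock file -> install command that installs from it and errors out instead of re-resolving.
# Assumed mapping; verify each flag against the tool version you actually use.
FROZEN_INSTALL = {
    "package-lock.json": ["npm", "ci"],
    "pnpm-lock.yaml": ["pnpm", "install", "--frozen-lockfile"],
    "bun.lock": ["bun", "install", "--frozen-lockfile"],
    "bun.lockb": ["bun", "install", "--frozen-lockfile"],
    "yarn.lock": ["yarn", "install", "--frozen-lockfile"],  # Yarn 1; Yarn 2+ uses --immutable
    "uv.lock": ["uv", "sync", "--locked"],
    "Pipfile.lock": ["pipenv", "install", "--deploy"],
}


def frozen_install_command(project_dir: str) -> list[str]:
    """Return the lock-file-respecting install command for the first lock file found."""
    root = Path(project_dir)
    for lockfile, command in FROZEN_INSTALL.items():
        if (root / lockfile).is_file():
            return command
    # No lock file means any install would resolve fresh; refuse rather than guess.
    raise FileNotFoundError(f"no known lock file in {root}")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as project:
        (Path(project) / "uv.lock").touch()
        print(frozen_install_command(project))  # ['uv', 'sync', '--locked']
```

If several lock files exist, the first match in dictionary order wins, which is itself a smell worth failing on.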

Pythonistas: I recommend Astral’s uv dependency manager. It’s just all-around better than any other dependency manager from both a speed and a safety perspective.

JS/TS developers: I recommend bun or pnpm. Again, these are just all-around better than the other options.

Use the deterministic operating modes of your dependency managers, combined with explicit version constraints, to ensure you aren’t getting the latest versions of dependencies without explicitly seeking them.

Disabling or limiting lifecycle scripts

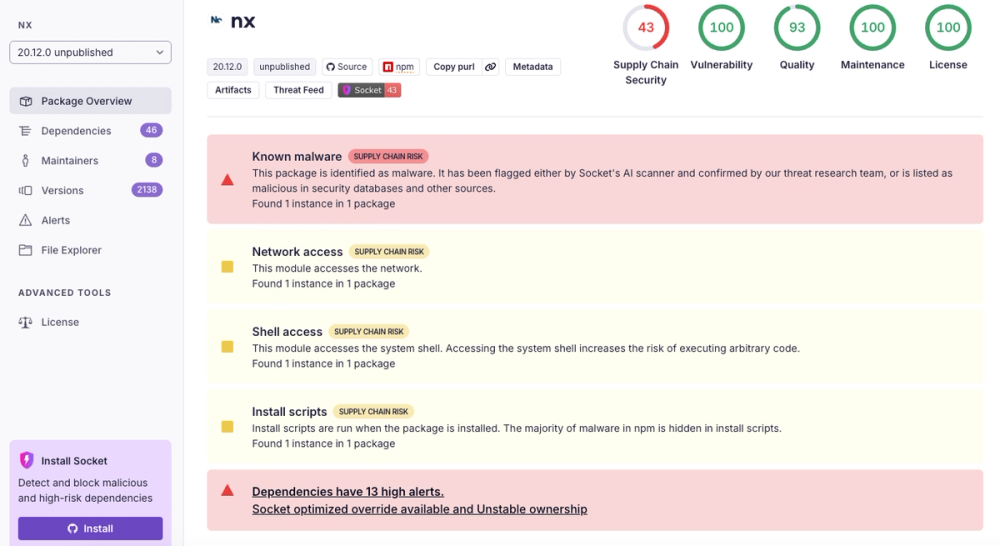

A common feature of dependency managers is their ability to run scripts at points in the package install lifecycle, e.g. pre- and post-install. These are also an extremely common attack path for malware authors, as they tend to be overlooked by security tooling. Sources for the numbers below will be provided in a research doc.

JavaScript

Total Packages: 2.5M+

Lifecycle Hooks: 2.2% use install scripts (~55,000)

Malicious Usage: 0.9-1.3%

The JavaScript ecosystem is the clearest case for disabling lifecycle hook scripts, and several modern dependency managers, such as bun and pnpm, actually do this by default. They require you to explicitly allow lifecycle scripts for each dependency that has them. If switching dependency managers isn’t possible, you are best served by disabling them with npm ci --ignore-scripts. If you’re in the rare situation where you need a script, it is best to look at moving to bun or pnpm.
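Before allowlisting scripts in bun or pnpm, or checking whether --ignore-scripts will break anything, it’s useful to know which dependencies actually have install hooks. npm records this in lockfileVersion 2/3 lock files as a hasInstallScript flag on each affected package entry; this Python sketch lists them. The sample lock file and package names are hypothetical.

```python
import json


def packages_with_install_scripts(lock: dict) -> list[str]:
    """List lock file entries that npm flagged with hasInstallScript (lockfileVersion 2/3)."""
    flagged = []
    for path, meta in lock.get("packages", {}).items():
        if path and meta.get("hasInstallScript"):
            # "node_modules/a/node_modules/b" -> "b"; scoped names stay intact.
            name = path.rsplit("node_modules/", 1)[-1]
            flagged.append(f"{name}@{meta.get('version', '?')}")
    return sorted(flagged)


if __name__ == "__main__":
    # Hypothetical lock file; for a real project, json.load() your package-lock.json.
    lock = json.loads("""
    {"lockfileVersion": 3, "packages": {
      "": {"name": "my-app"},
      "node_modules/native-thing": {"version": "3.1.0", "hasInstallScript": true},
      "node_modules/plain-lib": {"version": "1.0.0"}
    }}
    """)
    print(packages_with_install_scripts(lock))  # ['native-thing@3.1.0']
```

The resulting list is the shortlist to audit: anything not on it shouldn’t need scripts enabled at all.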

Python

Total Packages: 530K+

Lifecycle Hooks: ~33% use setup.py (~175,000)

Malicious Usage: 0.06-0.2%

The Python ecosystem is a little less clear-cut. Unfortunately, there isn’t a direct, guaranteed means of disabling pre-install scripts, nor does the data really support doing so. If you’re operating with a relatively well-supported set of dependencies, you can instead force the installation of binary wheels rather than building anything from source.

Python, as an interpreted language, relies heavily upon system libraries to get a lot of its work done. Some of this code needs to be built for specific systems, but in most cases the interfaces it interacts with are common enough across a set of systems (or the dependencies’ licenses permissive enough) that it can be pre-compiled and distributed as a binary that will just work. In the Python ecosystem these are called wheels. A wheel is basically a zip file that contains the Python source as well as any binary extensions.
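Whether a wheel contains compiled code is visible from its filename, which PEP 427 defines as name-version(-build)-python-abi-platform.whl. This Python sketch splits a filename into those tags: a py3-none-any wheel is pure Python, while something like cp312-cp312-manylinux_2_17_x86_64 was compiled for a specific interpreter and platform. The filenames are hypothetical, and the parser does not validate the individual tags.

```python
from typing import NamedTuple


class WheelTags(NamedTuple):
    name: str
    version: str
    python: str
    abi: str
    platform: str


def parse_wheel_filename(filename: str) -> WheelTags:
    """Split a PEP 427 wheel filename into its name, version and compatibility tags."""
    if not filename.endswith(".whl"):
        raise ValueError(f"not a wheel: {filename}")
    parts = filename[: -len(".whl")].split("-")
    if len(parts) == 6:
        parts.pop(2)  # drop the optional build tag
    if len(parts) != 5:
        raise ValueError(f"unexpected wheel filename: {filename}")
    return WheelTags(*parts)


def is_pure_python(tags: WheelTags) -> bool:
    # A 'none' ABI on the 'any' platform means no compiled extension is bundled.
    return tags.abi == "none" and tags.platform == "any"


if __name__ == "__main__":
    for filename in [
        "package_name-1.2.3-py3-none-any.whl",
        "package_name-1.2.3-cp312-cp312-manylinux_2_17_x86_64.whl",
    ]:
        tags = parse_wheel_filename(filename)
        kind = "pure" if is_pure_python(tags) else "compiled"
        print(tags.name, tags.python, tags.platform, kind)
```

Source distributions (.tar.gz and the like) are the files that trigger a build, and a build is where setup.py runs.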

Using wheels means you don’t execute the setup.py script, which closes down a vector of risk. You can force pip and uv to only use wheels by passing --only-binary=:all:. This will fail if a dependency you’re using doesn’t have a wheel, though. In pip you can also pass a list of the dependencies you want installed only from wheels, which lets you identify the ones that have setup.py scripts and treat them separately. Luckily, uv is just plain better and also allows you to pass --no-binary=some-other-package package_name, so you can specify exactly which dependencies to install from source and audit just those for risk. If you combine this with the earlier suggestion of dependency pinning, you narrow the window of risk to the moments when you deliberately update one of the dependencies you allow to run pre-install scripts, and only if that update happens to land during a supply chain attack.
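Here’s a minimal Python sketch of the pip side of this: it builds an install command that refuses source distributions for everything except an explicitly audited allowlist. It relies on pip’s documented --only-binary and --no-binary options, with :all: given first and the named exceptions after it. uv’s pip interface accepts similar flags, but check its documentation for the exact spelling. package_name and requirements.txt are placeholders.

```python
import subprocess
import sys


def wheel_only_install_args(requirements: str, build_from_source: list[str]) -> list[str]:
    """pip arguments that refuse source builds except for an audited allowlist."""
    args = [sys.executable, "-m", "pip", "install", "--only-binary=:all:"]
    if build_from_source:
        # Given after ":all:", so only these packages are switched to source builds.
        args.append("--no-binary=" + ",".join(build_from_source))
    args += ["-r", requirements]
    return args


def install(requirements: str, build_from_source: list[str]) -> None:
    """Run the install; pip errors out if any other package only ships an sdist."""
    subprocess.run(wheel_only_install_args(requirements, build_from_source), check=True)


if __name__ == "__main__":
    # "package_name" stands in for a dependency you have audited and allow to build.
    print(wheel_only_install_args("requirements.txt", ["package_name"]))
```

Keeping the allowlist short, and reviewed alongside your pins, is what keeps the set of setup.py scripts you ever execute small.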

Disable lifecycle scripts within the JavaScript and Python ecosystems to limit your exposure to malware.